Digital interaction has changed markedly with the rise of Large Language Models (LLMs), Generative Engine Search (GES), and Generative Engine Optimization (GEO), the practice of shaping content for generative engines. These technologies rely heavily on a powerful mathematical concept: vectors. Although invisible to most users, vectors are the backbone of how machines understand, generate, and optimize language. This article explores how vectors operate within LLMs and their pivotal role in GES, providing a clear picture of how modern search and generation systems function.

What Are Vectors?

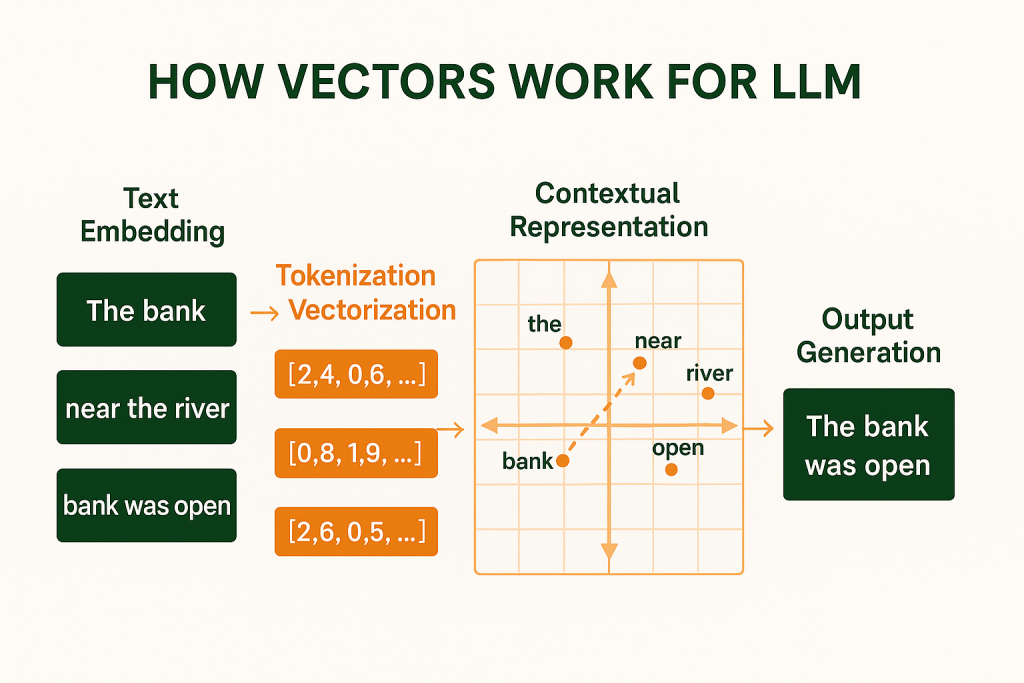

At their core, vectors are numerical representations of information. In the context of language models, vectors translate words, phrases, or even entire documents into a format that machines can process. Each vector exists in a high-dimensional space where proximity indicates semantic similarity. For example, the words “king” and “queen” may have vectors that are close together, while “king” and “banana” are far apart.

These vectors are not static; modern systems generate contextual embeddings, meaning that the same word can have different vectors based on its surrounding context.
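To make the idea of proximity concrete, here is a minimal sketch that measures how closely two vectors point in the same direction using cosine similarity. The three-dimensional vectors are hand-picked, hypothetical values for illustration only; real embeddings have hundreds or thousands of dimensions and come from a trained model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, not taken from any real model.
toy_vectors = {
    "king":   [0.9, 0.8, 0.1],
    "queen":  [0.8, 0.9, 0.1],
    "banana": [0.1, 0.0, 0.9],
}

king_queen = cosine_similarity(toy_vectors["king"], toy_vectors["queen"])
king_banana = cosine_similarity(toy_vectors["king"], toy_vectors["banana"])
print(f"king vs queen:  {king_queen:.3f}")
print(f"king vs banana: {king_banana:.3f}")
```

A score near 1 means the vectors point in nearly the same direction, while a score near 0 means they are close to unrelated.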

Vectors in LLMs

1. Tokenization and Embedding

The first step in transforming text into vectors is tokenization, where a sentence is broken into smaller units (tokens). Each token is then mapped to an embedding vector via an embedding layer.

Example:

- Sentence: “Cats chase mice.”

- Tokens: [“Cats”, “chase”, “mice”]

- Vectors: [0.23, -1.54, 3.91…], [-0.47, 0.02, -0.94…], etc.

These embeddings capture semantic information derived from the model’s training data. An embedding layer holds one vector per token in the vocabulary, typically tens to hundreds of thousands of them; the vectors are adjusted during training and stay fixed once training ends.
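The two steps can be sketched in a few lines. This is a simplified illustration, not how any particular model does it: the vocabulary, the whitespace tokenizer, and the randomly filled `embedding_table` are all hypothetical stand-ins. Real systems use subword tokenizers and learned embedding weights, and this toy tokenizer lowercases its input, unlike the example above.

```python
import random

# Hypothetical toy vocabulary; real vocabularies are far larger.
vocab = {"cats": 0, "chase": 1, "mice": 2, "<unk>": 3}
EMBED_DIM = 4

# Stand-in for a trained embedding table: one row of numbers per token id.
rng = random.Random(0)
embedding_table = [
    [rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in vocab
]

def tokenize(sentence):
    """Naive tokenizer: lowercase, split on spaces, strip punctuation."""
    return [word.strip(".,!?") for word in sentence.lower().split()]

def embed(sentence):
    """Map each token to its id, then look up that id's row in the table."""
    tokens = tokenize(sentence)
    ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokens]
    return tokens, ids, [embedding_table[i] for i in ids]

tokens, ids, vectors = embed("Cats chase mice.")
for tok, vec in zip(tokens, vectors):
    print(tok, [round(v, 2) for v in vec])
```

The lookup itself is nothing more than indexing a table by token id; all of the meaning lives in the numbers that training puts into the rows.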

2. Contextual Understanding with Transformers

LLMs, such as GPT, use transformer architectures where vectors are passed through layers that apply self-attention. This mechanism allows each token to update its vector based on the other tokens in the input.

- “I went to the bank to deposit money” vs. “I sat on the river bank”: the word “bank” will have different vectors because the surrounding context alters its meaning.

This context-sensitive transformation is essential for nuanced language understanding.
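The effect can be illustrated with a stripped-down version of scaled dot-product self-attention. This sketch makes several simplifying assumptions: it uses the input vectors directly as queries, keys, and values (real transformers first apply learned projection matrices and use many heads and layers), and the two-dimensional embeddings are hypothetical values in which the first axis loosely stands for "finance" and the second for "nature".

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = input."""
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all input vectors.
        outputs.append([
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(dim)
        ])
    return outputs

# Hypothetical static embeddings: [finance, nature].
static = {
    "bank": [0.5, 0.5],
    "deposit": [1.0, 0.0],
    "money": [0.9, 0.1],
    "river": [0.0, 1.0],
}

finance_context = ["bank", "deposit", "money"]
nature_context = ["river", "bank"]
bank_finance = self_attention([static[w] for w in finance_context])[0]
bank_nature = self_attention([static[w] for w in nature_context])[1]
print("bank (finance context):", [round(x, 3) for x in bank_finance])
print("bank (nature context): ", [round(x, 3) for x in bank_nature])
```

The same starting vector for "bank" ends up leaning toward the finance axis in one context and the nature axis in the other, because each output blends in the vectors of its neighbours.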

3. Generation and Prediction

When generating language, LLMs predict the next token from the vector the model has built for the current context. The model scores every token in its vocabulary against that context vector and converts the scores into probabilities.

- The scoring is a dot product between the context vector and each token’s output embedding, followed by a softmax. Cosine similarity, a length-normalized dot product, is the more usual measure in retrieval settings such as semantic search.
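One common way to realize this step is to take the dot product between the model's final context vector and an output embedding for every token in the vocabulary, then apply a softmax to turn the scores into probabilities. The sketch below assumes a three-token vocabulary, and both `hidden_state` and `output_embeddings` are hypothetical numbers chosen for illustration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical output embeddings, one row per vocabulary token.
vocab = ["mice", "cars", "clouds"]
output_embeddings = [
    [0.9, 0.1, 0.0],  # mice
    [0.1, 0.8, 0.1],  # cars
    [0.0, 0.1, 0.9],  # clouds
]

# Hypothetical context vector after the model has read "Cats chase".
hidden_state = [2.0, 0.5, 0.1]

# One dot product per vocabulary token gives the raw scores (logits).
logits = [
    sum(h * e for h, e in zip(hidden_state, row))
    for row in output_embeddings
]
probs = softmax(logits)

# Greedy decoding: pick the most probable token.
next_token = vocab[probs.index(max(probs))]
for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")
print("next token:", next_token)
```

Greedy selection is only one decoding strategy; many systems sample from the probability distribution instead, which is why the same prompt can produce different continuations.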

Vectors and Semantic Search

Traditional search relied largely on keyword matching. Vector-based semantic search, by contrast, identifies documents with meanings similar to the query, even if the exact words don’t match. This technique underpins Generative Engine Search (GES), and Generative Engine Optimization (GEO) is the practice of shaping content for it.

1. Embedding Queries and Documents

Both user queries and web content are converted into vectors. The engine then compares these vectors to find the closest matches.

- Query: “best affordable electric cars”

- Document A vector: close to query vector (talks about top-rated budget EVs)

- Document B vector: farther from query (talks about luxury EVs)

Document A ranks higher in the results, even if it never uses the exact words “best affordable electric cars.”
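The comparison above can be sketched as a small ranking function. The vectors are hypothetical stand-ins for what an embedding model would produce for the query and the two documents; only the ranking logic is the point here.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embedding of the query "best affordable electric cars".
query_vec = [0.8, 0.6, 0.1]

# Hypothetical document embeddings.
documents = {
    "Document A (top-rated budget EVs)": [0.7, 0.7, 0.1],
    "Document B (luxury EVs)": [0.7, -0.4, 0.6],
}

def rank(query, docs):
    """Return (score, name) pairs sorted from most to least similar."""
    scored = [
        (cosine_similarity(query, vec), name) for name, vec in docs.items()
    ]
    return sorted(scored, reverse=True)

ranking = rank(query_vec, documents)
for score, name in ranking:
    print(f"{score:.3f}  {name}")
```

At production scale the exhaustive loop is usually replaced by an approximate nearest-neighbour index, but the scoring idea is the same.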

2. Powering Zero-Click Results

With systems like Google’s AI Overviews or ChatGPT Search, LLMs use vectors to generate rich answers directly in the interface. They analyze documents with embeddings, synthesize relevant information, and deliver it instantly, minimizing the need for users to click through.

This represents a fundamental shift in search behaviour and demands that content creators optimize their content not just for keywords, but for semantic vector alignment.

Applications of Vectors in GEO

1. Intent Detection

Vectors allow LLMs to distinguish user intent by comparing the query’s vector to vectors that represent known intent categories. This is useful for serving the right format (e.g., article, product, FAQ).
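A minimal sketch of this idea is nearest-prototype classification: each intent category gets a representative vector, and the query is assigned to whichever one it is most similar to. The category names, prototype vectors, and query vector below are all hypothetical; in practice the prototypes might be averages of embedded example queries.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical prototype vector for each intent category.
intent_prototypes = {
    "informational": [0.9, 0.1, 0.1],
    "transactional": [0.1, 0.9, 0.1],
    "navigational": [0.1, 0.1, 0.9],
}

def detect_intent(query_vec):
    """Return the intent whose prototype is most similar to the query."""
    return max(
        intent_prototypes,
        key=lambda name: cosine_similarity(query_vec, intent_prototypes[name]),
    )

# Hypothetical embedding of a query such as "buy electric car online".
query_vec = [0.2, 0.8, 0.3]
intent = detect_intent(query_vec)
print("detected intent:", intent)
```

The detected intent could then drive the choice of result format, for example a product listing for a transactional query.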

2. Content Clustering

Documents with similar vectors can be grouped into clusters, representing topics or themes. This helps in:

- Content strategy planning

- Identifying content gaps

- Organizing information architecture
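As an illustration, the sketch below groups pages with a simple greedy threshold rule: a page joins the first cluster whose seed page it resembles closely enough, otherwise it starts a new cluster. This is a deliberately simple stand-in for more robust methods such as k-means or hierarchical clustering, and the page names, vectors, and the `threshold` value are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical page embeddings.
pages = {
    "ev-buying-guide": [0.9, 0.1],
    "ev-charging-costs": [0.8, 0.3],
    "sourdough-basics": [0.1, 0.9],
    "bread-flour-types": [0.2, 0.8],
}

def cluster(page_vectors, threshold=0.8):
    """Greedy clustering: compare each page to each cluster's first page."""
    clusters = []
    for name, vec in page_vectors.items():
        for group in clusters:
            seed_vec = page_vectors[group[0]]
            if cosine_similarity(vec, seed_vec) >= threshold:
                group.append(name)
                break
        else:
            # No existing cluster was close enough, so start a new one.
            clusters.append([name])
    return clusters

clusters = cluster(pages)
for group in clusters:
    print(group)
```

A topic that yields only a single-page cluster is one possible signal of a content gap worth filling.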

3. Link Relevance and Internal SEO

Internal linking strategies are being redefined by vector analysis:

- Pages with semantically similar vectors can be linked to each other

- Linking related pages helps prevent dilution of topical authority

- Meaningful navigation enhances the user journey
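One way to turn this into a workflow is to suggest, for each page, the most similar other pages as link targets and to skip pairs that fall below a similarity floor. The sketch below is an assumption-laden illustration: the URLs, vectors, `top_k`, and `min_similarity` are hypothetical and would need tuning against real embeddings.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical page embeddings keyed by URL path.
pages = {
    "/ev-buying-guide": [0.9, 0.1, 0.2],
    "/ev-tax-credits": [0.8, 0.2, 0.3],
    "/home-charger-install": [0.6, 0.1, 0.7],
    "/sourdough-basics": [0.0, 0.9, 0.1],
}

def suggest_links(page_vectors, top_k=1, min_similarity=0.7):
    """For each page, list up to top_k similar pages above the floor."""
    suggestions = {}
    for name, vec in page_vectors.items():
        scored = [
            (cosine_similarity(vec, other_vec), other)
            for other, other_vec in page_vectors.items()
            if other != name
        ]
        scored.sort(reverse=True)
        suggestions[name] = [
            other for score, other in scored[:top_k] if score >= min_similarity
        ]
    return suggestions

suggestions = suggest_links(pages)
for page, targets in suggestions.items():
    print(page, "->", targets)
```

The similarity floor is what keeps an off-topic page from being linked just because it happens to be the least dissimilar candidate.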

How to Optimize for Vectors and GES

1. Focus on Semantic Relevance

Write content that clearly addresses user needs and covers related topics thoroughly. Use natural language and answer questions as if speaking to a human.

2. EEAT Still Matters

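Experience, Expertise, Authoritativeness, and Trustworthiness are not encoded in the vectors themselves, but they may influence which semantically matching pages a generative engine chooses to retrieve and cite. Among close matches, high-authority pages may carry more weight.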

3. Use Structured Data

Structured data can help engines interpret content by explicitly marking what it means (e.g., product, review, author).
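As a small illustration, the sketch below builds schema.org markup for a product with a review and an author, then serializes it as JSON-LD for embedding in a page. The product name, review text, and reviewer are placeholder values, and the exact properties a given engine consumes should be checked against its own documentation.

```python
import json

# Hypothetical product page data; every value here is a placeholder.
product_markup = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Budget EV",
    "review": {
        "@type": "Review",
        "reviewBody": "Comfortable, efficient, and affordable.",
        "author": {"@type": "Person", "name": "Example Reviewer"},
    },
}

# Serialize to JSON-LD and wrap it in the script tag used to embed it.
json_ld = json.dumps(product_markup, indent=2)
script_tag = f'<script type="application/ld+json">\n{json_ld}\n</script>'
print(script_tag)
```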

4. Monitor Embedding-Based Tools

Experiment with embedding and vector-search tools such as:

- OpenAI Embeddings API

- Cohere

- Pinecone + LangChain

- Google’s AI Overviews and performance tools (if available)

These platforms allow testing how content is represented in vector space.

A Glimpse at the Future

With advancements in generative AI, vectors are increasingly becoming the currency of digital interaction. Content creators, marketers, and developers must understand and adapt to this shift.

Future innovations will likely include:

- Personalized vector spaces (e.g., customized search embeddings per user profile)

- Real-time vector updates based on engagement data

- Vector fingerprinting for plagiarism, reputation, and originality checks

Vectors form the unseen architecture of modern language processing. In LLMs, they represent meaning, enable contextual understanding, and drive text generation. In GEO and GES, they redefine how content is found, interpreted, and delivered. As the digital world transitions toward semantic, AI-driven experiences, understanding vectors is not just a technical curiosity; it’s a competitive advantage.

Mastering the language of vectors means mastering the language of the future.